Machine (re)learning: Neural Networks from scratch

Having recently changed roles, I am now in the enviable position of starting to do some work with machine learning (ML) tools. My undergraduate degree was actually in Artificial Intelligence, but that was over a decade ago, which is a long time in computer science in general, let alone in machine learning and AI, which have progressed massively in the last few years.

So, needless to say, coming back to it there has been a lot to learn. In the future I will write a bit more about some of the tools and libraries that exist these days (both expanding on the traditional Stanford AI libraries I have mentioned previously with my tweet sentiment analysis, and newer frameworks that cover "deep learning").

Anyway, inspired by this post, I thought it would be a fun Sunday night refresher to write my own neural network. The first, and last, time that I wrote a neural network was for my final year dissertation (and that code is long gone), so I was writing from first principles. The rest of this post will be a very straightforward introduction to the ideas and the code for a basic neural network with a single hidden layer and a simple sigmoid activation function. I am training and testing on a very simple labeled data set, and with the current configuration it scores 90% on the unseen classification tests. I am by no means any kind of expert in this field, and there are plenty of resources and papers written by people who are inventing this stuff as we speak, so treat this as the musings of someone re-learning it.

As always, all the code is on GitHub (and, as per my change in roles, this time it is all written in Scala).

A brief overview

The basic idea is that a Neural Network (NN) attempts to mimic the parallel architecture of the human brain. The human brain is a massive network of billions of simple, interconnected neural cells. Given a stimulus, each one either "fires" or doesn't, and it's these firing neural cells and synapses that enable us to learn (excuse my crude explanation, I'm clearly not a biologist).

So that is what we are trying to build: a connected network of neurons that, given some stimuli, either "fire" or don't.

A Neuron

Ok, so this seems like a sensible place to start, right? We all know what a network is, so what are these nodes in our network that we are connecting? These are the decision points, and in themselves they are incredibly simple. Each one is basically a function that takes n input values, multiplies each by a pre-defined weight (one per input), sums the results, adds a bias and then runs the total through an activation function (think of this as our fire/don't-fire function).

In terms of our code, this is pretty simple (don't worry about the sigmoid derivative values we are setting, we are just doing this to save time later):
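As a rough sketch of the idea (the names `ActivationFunction`, `NeuronOutput` and `fire` are my own placeholders for illustration and may not match what is in the GitHub repo), a neuron might look something like this:

```scala
// Hypothetical names throughout: a sketch of the idea, not the repo's code.
trait ActivationFunction {
  def apply(x: Double): Double
  def derivative(x: Double): Double
}

// What a neuron produces when it fires: the activated value, plus the
// derivative of the activation at that point (stored to save time later).
final case class NeuronOutput(value: Double, derivative: Double)

class Neuron(activation: ActivationFunction,
             val weights: Vector[Double],
             val bias: Double) {

  def fire(inputs: Vector[Double]): NeuronOutput = {
    require(inputs.length == weights.length, "expected one weight per input")
    // Multiply each input by its weight, sum the results and add the bias
    val weightedSum = inputs.zip(weights).map { case (i, w) => i * w }.sum + bias
    NeuronOutput(activation(weightedSum), activation.derivative(weightedSum))
  }
}
```

With a pass-through activation, weights of 0.5 and -1.0, a bias of 0.25 and inputs of 2.0 and 1.0, the weighted sum is (2.0 * 0.5) + (1.0 * -1.0) + 0.25 = 0.25.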

As you can see, the neuron holds the state about the weights of the different inputs (a NN is normally fixed in terms of number of neurons, so once initialised at the start we know that we will get the same number of inputs).

You may have also noticed the first line of the class, where we require an ActivationFunction to be applied - as I mentioned, this is our final processing of the output. In this example I am just using the Sigmoid function:
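For reference, here is a minimal sketch of the sigmoid and its derivative (the object name and method names are assumptions on my part; in a fuller version it would implement whatever activation interface the neuron expects):

```scala
// A standalone sketch of the sigmoid activation; names are illustrative only.
object Sigmoid {
  // Squashes any real number into the range (0, 1)
  def apply(x: Double): Double = 1.0 / (1.0 + math.exp(-x))

  // Slope of the sigmoid at x, conveniently expressed via the sigmoid itself:
  // sigmoid'(x) = sigmoid(x) * (1 - sigmoid(x))
  def derivative(x: Double): Double = {
    val s = apply(x)
    s * (1.0 - s)
  }
}
```

Large positive inputs push the output towards 1 ("fire"), large negative inputs push it towards 0 ("don't fire"), and an input of 0 sits exactly in the middle at 0.5.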

As you can see, it's a pretty simple function. Much like the brain, the neurons are very simple processors, and it's only the combination of them that makes the brain so powerful (or deep learning, for that matter).

The network

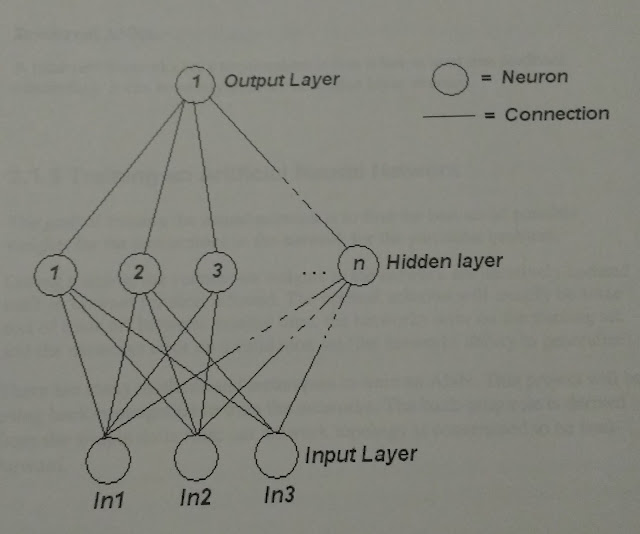

Ok, so a simple "shallow" NN has an input layer, a single hidden layer and an output layer (deep NNs will have multiple hidden layers). The network is fully connected between layers: that is to say, each node is connected to every node in the next layer up, and that's it. No neuron is connected to any layer other than the next one, and no connections are missed.

The exact setup of the network is largely problem dependent, but there are some observations:

- The input layer has to correlate to your inputs. Let's say you have a dataset that contains house price data, with the input values number of rooms and square footage: you would then have two input neurons.

- Similarly, if you were using the NN for a classification problem and you know you have a fixed number of classifications, the output neurons would correlate to that. For example, if you were using the NN to recognise handwritten digits (0-9), you would likely have 10 output neurons, one for each possible output.

The number of hidden neurons, or the number of layers, is a lot more problem dependent and is something that needs to be tuned per problem.

If you have ever worked with graph type structures in code before, then setting up a simple network of these neurons is also relatively straightforward, given the uniform structure of the network.

The weights

Oh right, yes. So all these neurons are connected, but it's a weighted graph, in CS terms. That is, the connection between each pair of nodes is assigned a weight. Taking the house price dataset as an example, after looking at the data we might determine that the number of rooms is a more significant factor in the end result, so that connection should have a greater weighting than the other input. Now that is not exactly what happens, but in simple terms it's a good way of thinking about it.

Given that the end goal is for the NN to learn from the data, it really doesn't matter too much what we initialise the weights for all the connections to, so at start-up we can just assign random values. Just think of our NN as a newborn baby - it has no idea how important different inputs are, or how important the different neural cells that fire in response to the stimuli are. Everything is just firing off all over the place as it slowly starts to learn!
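To make the random start-up concrete, here is a small sketch that builds the weights for a fully connected network from a list of layer sizes. The nested `Vector` representation, the `NetworkInit` name and the choice of the range -1 to 1 are all assumptions of mine for illustration:

```scala
import scala.util.Random

// Hypothetical representation: weights(layer)(neuron)(input)
object NetworkInit {
  // layerSizes = List(2, 3, 2) means 2 inputs, 3 hidden neurons, 2 outputs.
  // Every neuron gets one random weight per neuron in the previous layer.
  def randomWeights(layerSizes: List[Int], rng: Random): Vector[Vector[Vector[Double]]] =
    layerSizes.zip(layerSizes.drop(1)).map { case (inputs, neurons) =>
      // Assumed range: uniformly random values in [-1, 1)
      Vector.fill(neurons)(Vector.fill(inputs)(rng.nextDouble() * 2.0 - 1.0))
    }.toVector
}
```

Calling `NetworkInit.randomWeights(List(2, 3, 2), new Random())` gives two sets of weights: three hidden neurons with two weights each, and two output neurons with three weights each. The input layer has no weights of its own, as it just passes the values in.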

So what next?

Ok, so we have our super-simple neurons that each just mimic a single brain cell, and we have them connected in a structured but randomly weighted fashion, so how does the network learn? Well, this is the interesting bit...

When it comes to training the network we need a pretty large dataset so that it can learn enough to start generalising - but at the same time, we don't want to train it on all the data, as we want to hold some back to test it at the end, to see just how smart it really is.
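Holding some data back can be as simple as shuffling the records and cutting the list in two. This is only a sketch, and the `DataSplit` name and the idea of splitting by a fraction are my own assumptions rather than anything from the repo:

```scala
import scala.util.Random

object DataSplit {
  // Shuffle the records, then keep the first `trainFraction` of them for
  // training and hold the rest back for testing at the end.
  def split[A](data: Vector[A], trainFraction: Double, rng: Random): (Vector[A], Vector[A]) = {
    require(trainFraction >= 0.0 && trainFraction <= 1.0, "fraction must be between 0 and 1")
    val shuffled = rng.shuffle(data)
    shuffled.splitAt((data.length * trainFraction).toInt)
  }
}
```

For example, splitting 8 records with a fraction of 0.75 leaves 6 for training and 2 unseen records for testing.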

In AI terms, we are going to be performing "supervised" learning - this just means that we will train it with a dataset where we know the correct answer, so we can make adjustments based on how well (or badly) the network is doing - this is different to "unsupervised" learning, where we have lots of data, but we don't have the right answer for each data point.

Training: Feed forward

The first step is the "feed-forward" step - this is where we grab the first record from our training data set and feed the inputs into our randomly initialised NN. That input is fed through all of the neurons in the NN until we get to the output layer and we have the network's attempt at the answer. As the weighting is all random, this is going to be way off (imagine a toddler's guess the first time they ever see a smartphone).
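A minimal sketch of the feed-forward step might look like the following. The cut-down `Neuron` here (with the sigmoid hard-coded) and the `run` function are hypothetical, written just to keep the example self-contained:

```scala
object FeedForward {
  // Hypothetical minimal neuron: one weight per input plus a bias
  final case class Neuron(weights: Vector[Double], bias: Double) {
    def fire(inputs: Vector[Double]): Double = {
      val weightedSum = inputs.zip(weights).map { case (i, w) => i * w }.sum + bias
      1.0 / (1.0 + math.exp(-weightedSum)) // sigmoid activation
    }
  }

  type Layer = Vector[Neuron]

  // Walk through the layers in order: each layer's outputs become the
  // inputs to the next layer, and the last layer's outputs are the answer.
  def run(layers: Vector[Layer], inputs: Vector[Double]): Vector[Double] =
    layers.foldLeft(inputs) { (signal, layer) => layer.map(_.fire(signal)) }
}
```

Only the hidden and output layers appear in `layers`, since the input layer just hands the raw values to the first set of neurons.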

As you can see, the code is really simple, just iterate through the layers calculating the output for each neuron. Now, as this is supervised learning we also have the expected output for the dataset, and this means we can work out how far off the network is and attempt to adjust the weights in the network so that next time it performs a bit better.

Training: Back propagation

This is where the magic, and the maths, comes in. The TL;DR overview is basically: we know how wrong the network was in its guess, so we attempt to update each of the connection weights based on that error, and to do so we use differentiation to work out the direction to go to minimise the error.

This took a while of me staring at equations and racking my brain trying to remember my A-level maths to get this, and even so, I wouldn't like to have to go back and attempt to explain it to my old maths teacher, but I'm happy I have enough of a grasp of what is going on, having now coded it up.

In a very broad, rough overview, here is what we are going to do now:

- Calculate the squared error of the outputs. That is a fairly simple equation of 1/2 * (target - output)^2

- From there, we will work out the derivative of this function. A lot of the error values we will start to work with now use differentiation and the derivatives of the result. This takes a little bit of calculus, but the basic reason is relatively straightforward if you think about what differentiation is for.

- We work back through the network, calculating the error for every neuron by combining the error, the derivatives and the weighting (the weighting determines how big a part a particular connection played in an error, so it also needs to be considered when correcting the weights).
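The steps above can be sketched as a handful of small functions. This assumes sigmoid activations and the squared error given above; the function names and the `learningRate` parameter are my own, and this is the textbook form of the calculation rather than a copy of the code in the repo:

```scala
object BackProp {
  // Error for one output: 1/2 * (target - output)^2
  def squaredError(target: Double, output: Double): Double =
    0.5 * math.pow(target - output, 2)

  // Derivative of that error with respect to the output
  def errorDerivative(target: Double, output: Double): Double = output - target

  // Derivative of the sigmoid, written in terms of the neuron's own output
  def sigmoidDerivative(output: Double): Double = output * (1.0 - output)

  // Delta for an output neuron: how the error changes with its weighted sum
  def outputDelta(target: Double, output: Double): Double =
    errorDerivative(target, output) * sigmoidDerivative(output)

  // Delta for a hidden neuron: the deltas of the next layer, each scaled by
  // the weight of the connection from this neuron into that layer
  def hiddenDelta(hiddenOutput: Double,
                  outgoingWeights: Vector[Double],
                  nextDeltas: Vector[Double]): Double =
    outgoingWeights.zip(nextDeltas).map { case (w, d) => w * d }.sum *
      sigmoidDerivative(hiddenOutput)

  // Nudge one weight a small step against its gradient, where the gradient
  // is the neuron's delta multiplied by the input on that connection
  def updatedWeight(weight: Double, delta: Double, input: Double, learningRate: Double): Double =
    weight - learningRate * delta * input
}
```

As a quick sanity check: if the target is 1.0 and the network outputs 0.5, the delta is negative, so a weight on a connection carrying a positive input gets increased, pushing the output up towards the target next time.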

Once we have adjusted the weight for each connection based on the error, we start again and run the next training data record. Given the variation in the dataset, the next training record might be quite different to the previous one, so the adjustment might be quite different too. After lots (maybe millions) of iterations over the data, incrementally tweaking and adjusting the weights for the different cases, hopefully the network starts to converge on something like a reasonable performance.

Maths fun: Why the derivative of the errors?

So, why is calculating the derivative relevant in adjusting the weights?

If you imagine the network's error as a function of all the different weights, it's pretty complicated. For the sake of easier visualisation, let's reduce it to something we can plot on a 3-d graph (e.g. we have just two weights to adjust, plotted along the two horizontal axes, with the error as the height). Now imagine our function plots a graph something like this:

(taken from the Wikipedia page on partial derivatives)

The derivative of the function allows us to work out the direction of the slope in the graph from a given point, so with our current weights (co-ordinates) we can use the derivative to work out the direction in which we need to adjust these weights to move downhill, towards a lower error.
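To see the "follow the slope downhill" idea in isolation, here is a toy sketch using a made-up, bowl-shaped error function of just two weights (the function, the starting point and the learning rate are all chosen by me purely for illustration):

```scala
object GradientDescent {
  // Example error surface with its lowest point at (0, 0)
  def error(w1: Double, w2: Double): Double = w1 * w1 + w1 * w2 + w2 * w2

  // Partial derivatives of `error` with respect to each weight: together
  // they point in the direction in which the error increases fastest
  def gradient(w1: Double, w2: Double): (Double, Double) =
    (2 * w1 + w2, w1 + 2 * w2)

  // Repeatedly step both weights a little way against the slope
  def descend(start: (Double, Double), learningRate: Double, steps: Int): (Double, Double) =
    (1 to steps).foldLeft(start) { case ((w1, w2), _) =>
      val (g1, g2) = gradient(w1, w2)
      (w1 - learningRate * g1, w2 - learningRate * g2)
    }
}
```

Starting from somewhere like (3.0, -2.0) with a learning rate of 0.1, a couple of hundred steps is enough to roll down very close to the bottom of the bowl. A real network does the same thing, just with many more weights and a much bumpier surface.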

Conclusion

The whole NN in Scala is currently on GitHub, and there isn't much more code than I have included here. I have found it pretty helpful to code it up from scratch and actually have to think about it, and it has been fun coding it up and seeing it train up to 90% accuracy on the unseen data set I was using (just a simple two-in two-out dataset, so not that impressive).

Next up I will see how the network performs against the MNIST dataset (the classification of handwritten digits, which is like the Hello-World benchmark for machine learning).

Oh, and if you are interested as to why the images look like a dodgy photocopy, they are photos of original diagrams that I included in my final year dissertation in university, just for old times' sake!
